About ProjektMagazin

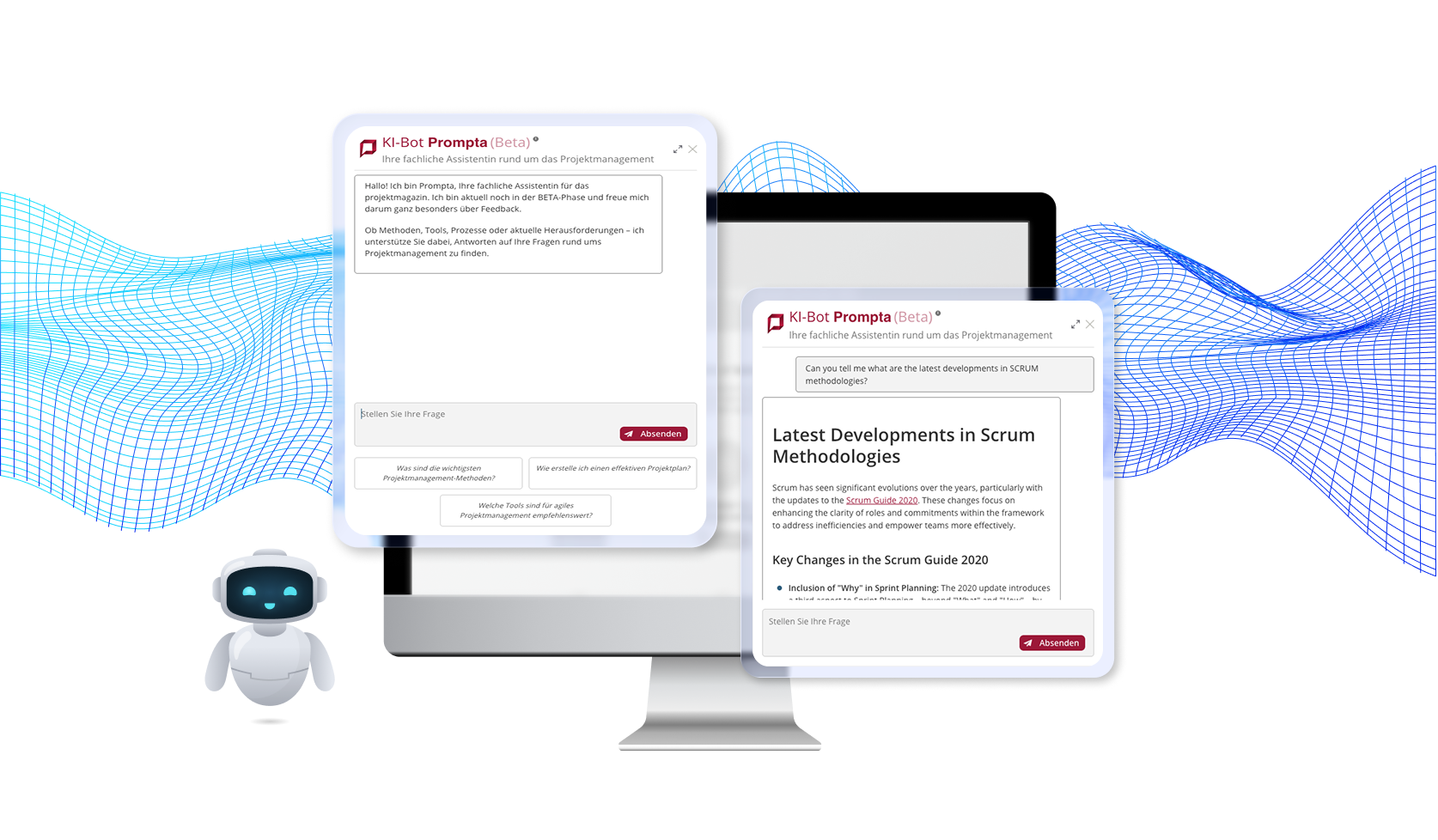

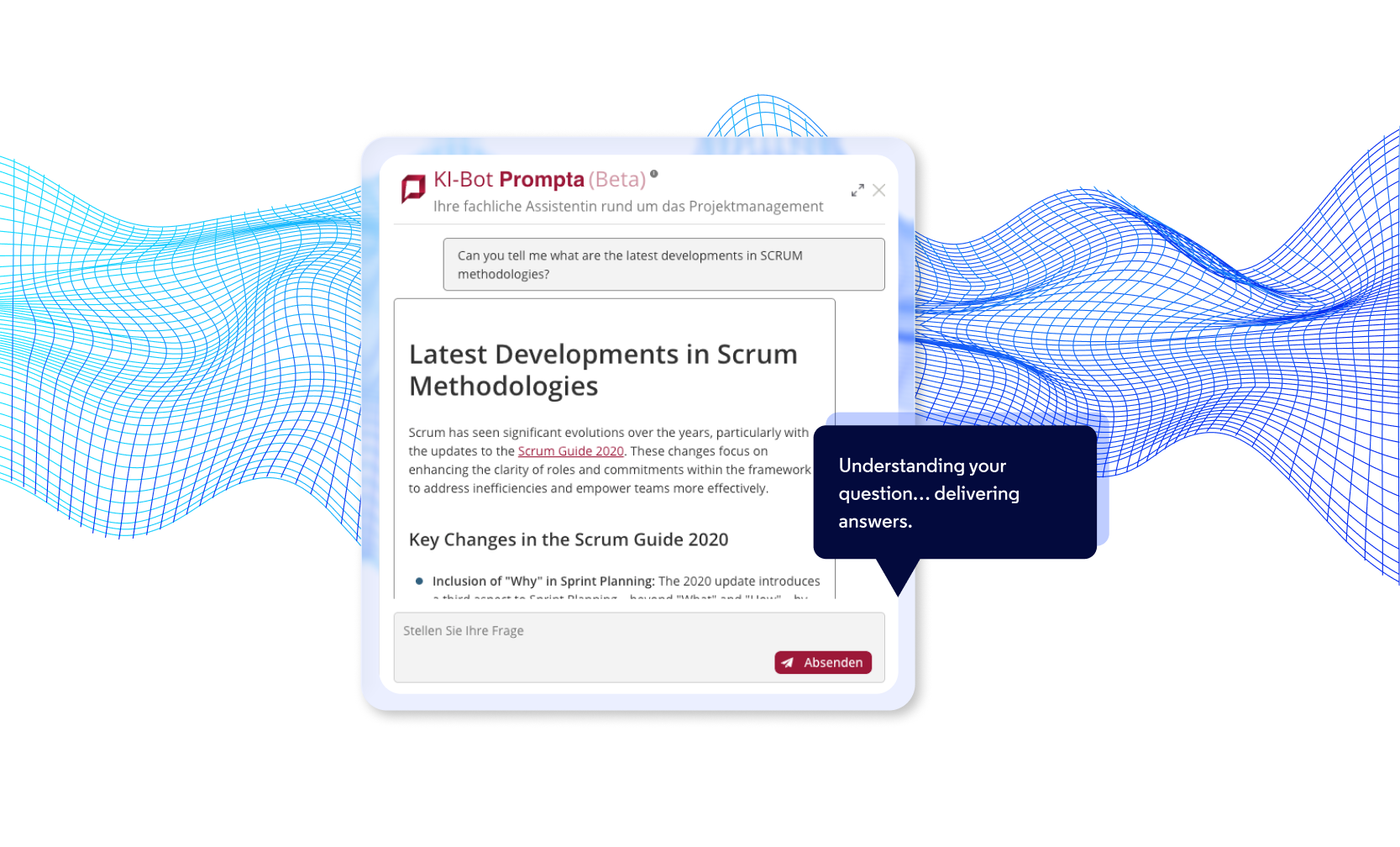

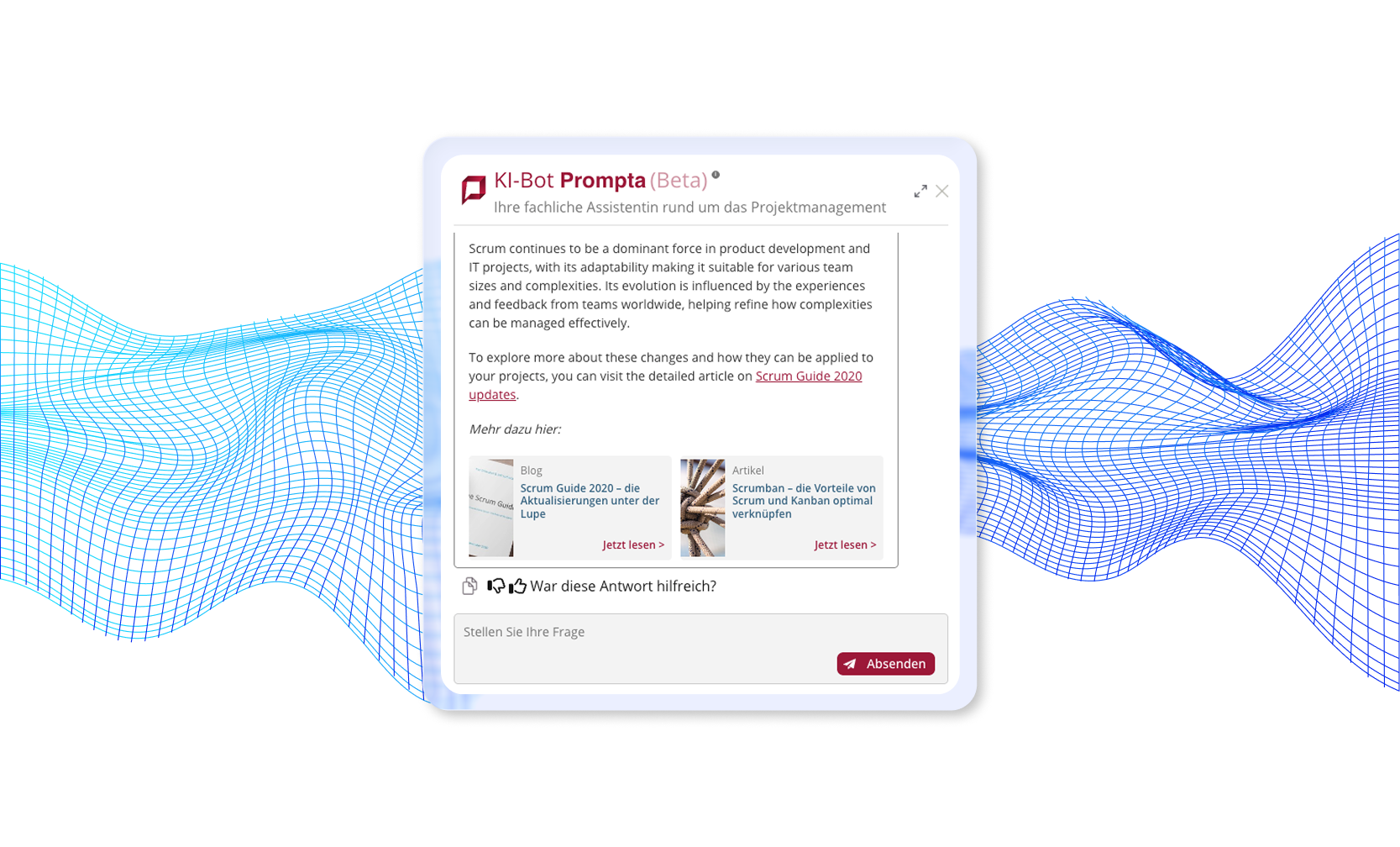

What we built

Business impact and operational improvements

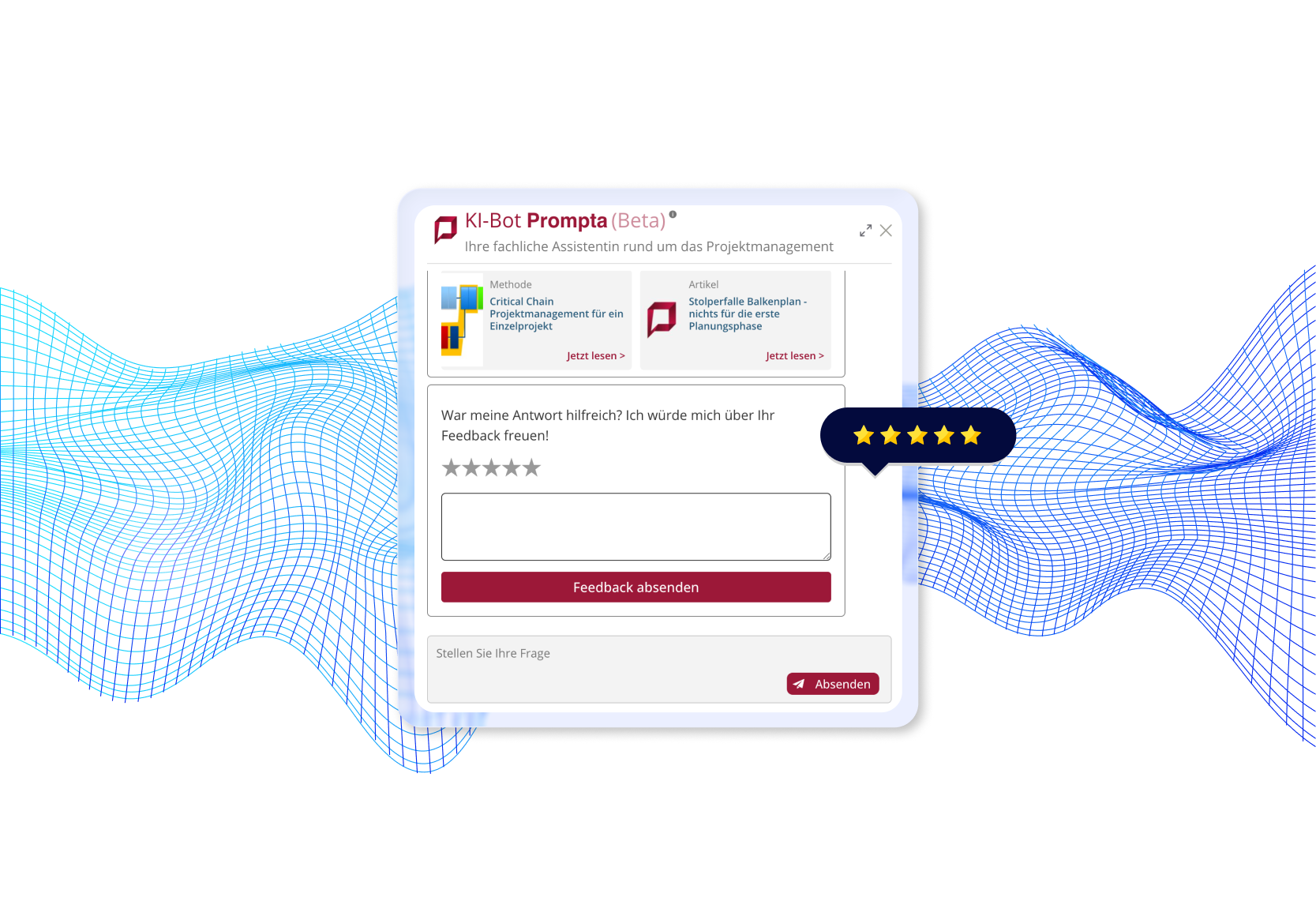

The client reports high user satisfaction and has already identified visual UI enhancements as the next phase of improvements, a clear signal that the core functionality meets their needs.

Technology choices

We selected our technology stack based on production reliability, scalability, and long-term maintainability.

Read more about the technical aspects of this project on our blog

In our articles, we describe how we ensured the chatbot’s knowledge stays up to date, how we selected the right tools and frameworks to build a stable RAG pipeline, and which techniques we used to reduce response times and improve the precision of generated answers.