AI Automators transforms complex AI workflows from code to configuration. This case study reveals how BetterRegulation built production-grade AI workflows processing 200+ documents monthly with 95%+ accuracy, using multi-step chains, background queues, and admin-managed prompts, while keeping custom integration code to a minimum.

In this article:

- What is AI Automators?

- Project context

- When to use AI Automators vs custom code

- How do AI Automator entities work?

- How to build a multi-step AI workflow with chains?

- How to manage prompts in AI Automators?

- How to integrate AI Automators with Drupal forms?

- Queue integration with RabbitMQ

- How to monitor and debug AI Automators?

- Real-world example: BetterRegulation

- What are the best practices for AI Automators?

- AI Automators: benefits and real-world results

- Want to implement AI Automators in your Drupal platform?

What is AI Automators?

AI Automators is a submodule of the Drupal AI module that provides a framework for building automated workflows in Drupal, particularly powerful for AI integrations. An AI Automator can be a single operation (like text summarization), while an AI Automator Chain creates multi-step workflows where each operation’s output becomes the next operation’s input.

Think of it as a workflow engine for complex, multi-step processes that lets you build AI workflows through admin configuration rather than code. It provides provider abstraction, meaning it works seamlessly with OpenAI, Anthropic, local models, and more. The workflows you create become reusable components. Build once, apply to multiple content types.

Without AI Automators, integrating AI into Drupal means writing custom code for every workflow, manually integrating with each API provider, hardcoding prompts into your codebase, dealing with repetitive boilerplate, and managing complex orchestration logic yourself. It’s doable, but time-consuming and brittle.

With AI Automators, you configure workflows through the UI, manage prompts in the admin interface where non-developers can refine them, switch AI providers without code changes, reuse workflows across content types, and build multi-step processes visually. The shift from code to configuration dramatically reduces development time and enables iteration by domain experts.

BetterRegulation uses AI Automators to handle:

- Document categorization, where 15 fields are auto-populated from PDF analysis.

- Summary generation that creates three different summary types (long, short, and obligations).

- Background processing via queues for time-intensive operations.

- Comprehensive error handling and logging throughout the workflow.

This guide shows you how to leverage AI Automators for your AI workflows.

Project context

This deep dive is based on BetterRegulation’s real-world implementation. They had an existing Drupal 11 platform and wanted to add AI capabilities without disrupting their editorial workflow or requiring extensive custom development.

The challenges were multi-faceted. Multiple content types – Know How documents, General Consultations, and Station content – all needed AI processing, but each required different approaches. Real-time categorization worked for some workflows, while background summary generation made more sense for others. The legal editors, who understood document taxonomy nuances better than anyone, needed the ability to refine prompts based on accuracy testing without waiting for developer cycles. And crucially, infrastructure was already in place: RabbitMQ was handling other queuing needs, Drupal 11 was running in production, and the environment was stable – they didn’t want to introduce major architectural changes.

The solution was to use AI Automators to configure AI workflows through the admin UI rather than writing custom code for each integration. This architectural decision enabled rapid iteration during development and, more importantly, gave domain experts (the legal editors) the ability to refine prompts based on real-world accuracy without developer involvement.

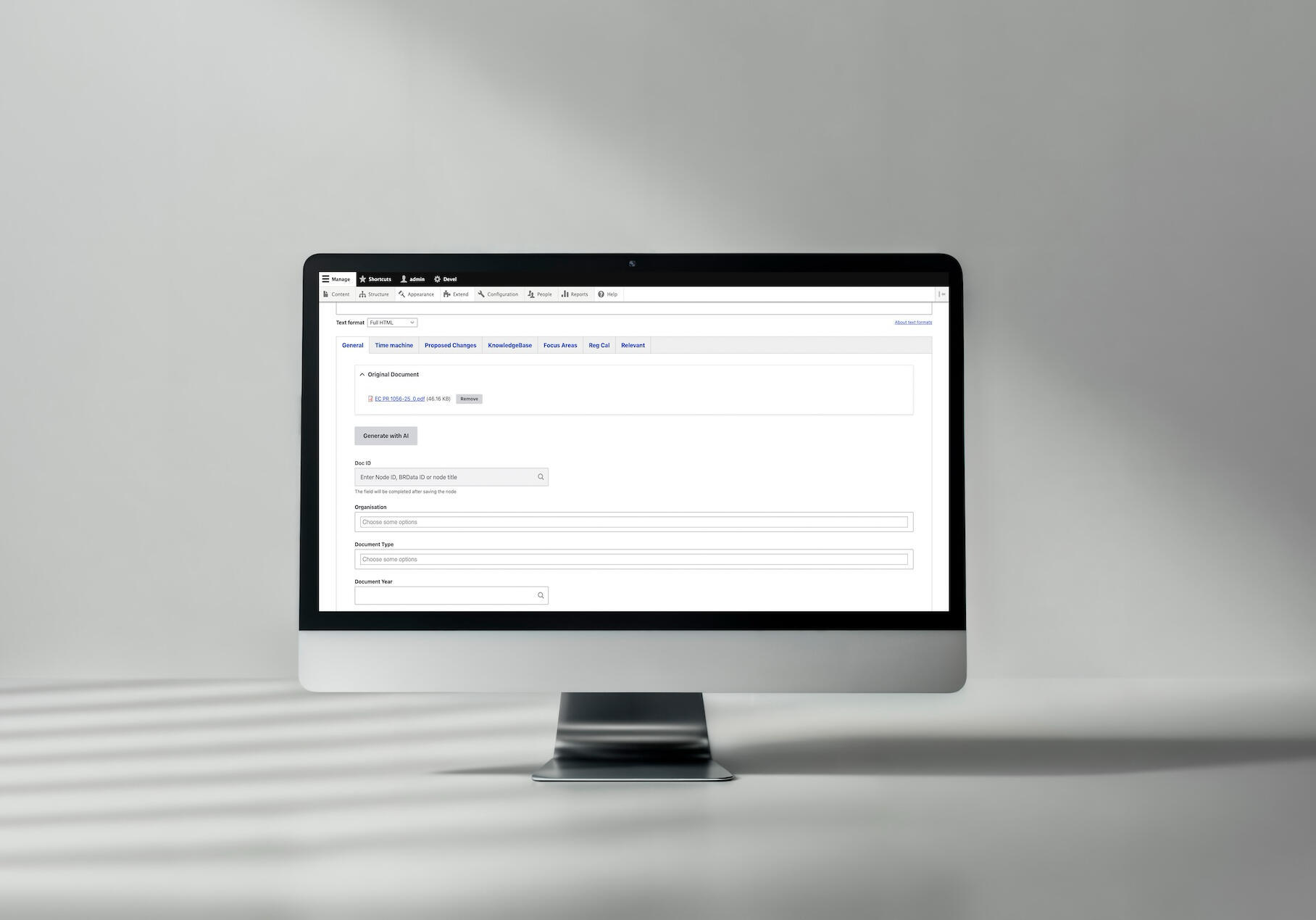

An example of an AI workflow implementation for document categorization at Better Regulation

When to use AI Automators vs custom code

Not every AI integration needs AI Automators. The decision depends on your workflow complexity, team structure, and iteration speed requirements.

AI Automators shines for complex workflows

AI Automators becomes valuable when you’re dealing with multi-step workflows – think PDF extraction, then text analysis, then result parsing and field population. Each step depends on the previous one, and coordinating this manually means writing orchestration code that quickly becomes complex.

The module really proves its worth when you need multiple AI operations working together. Categorization, summarization, and analysis might all run on the same content, each with different prompts and models. AI Automators handles this coordination through configuration rather than code.

Non-technical configuration is another major advantage. When legal editors or domain experts need to refine prompts based on accuracy testing, giving them admin UI access beats waiting for developer availability every time. Your iteration cycle drops from days to minutes.

If you want flexibility across AI providers – testing whether Claude performs better than GPT for your specific use case, or keeping options open as the AI landscape evolves – AI Automators abstracts away provider-specific code. Switch models through configuration, not code deployment.

Rapid iteration during development and testing becomes straightforward. Clone an automator, modify prompts, test on real documents, compare results. No Git branches, no deployment pipelines – just configure, test, refine.

Finally, AI Automators helps with standardization. When you have multiple content types needing similar AI processing, creating reusable workflow patterns prevents code duplication and ensures consistency.

When custom code makes more sense

Custom code remains the better choice for highly specialized logic that doesn’t fit the workflow model. If your business rules involve complex conditional branching, external system orchestration, or algorithmic processing beyond AI calls, custom services give you full control.

Performance-critical paths might also warrant custom code. While AI Automators adds minimal overhead, if you’re processing thousands of documents per hour and every millisecond counts, direct API integration eliminates abstraction layers.

For complex integrations involving multiple external systems with intricate coordination requirements, custom code provides the flexibility to handle edge cases that workflow configuration can’t easily express.

And sometimes you just have a single, simple operation – one API call, straightforward processing. Adding workflow orchestration would be overkill. Direct integration is cleaner.

BetterRegulation’s choice

BetterRegulation chose AI Automators because their needs aligned perfectly with the module’s strengths. They had multiple workflow steps – PDF extraction, text cleaning, AI analysis, result parsing, and field population – that needed orchestration. Their legal editors, the domain experts who best understood taxonomy nuances, needed to refine prompts without going through developers. They wanted the flexibility to test different AI models as the landscape evolved. A consistent approach across document types was essential for maintainability. And during development, rapid iteration mattered more than squeezing out every millisecond of performance.

The result: faster initial development, easier ongoing maintenance, and more flexibility to adapt as requirements evolved.

How do AI Automator entities work?

Understanding the fundamental building blocks of AI Automators helps you design effective workflows. The module organizes processing around three key concepts that work together to transform inputs into outputs.

The core concept

An AI Automator in the module can represent either a single AI operation or a complete multi-step workflow. When you need multiple steps working together, you create an AI Automator Chain – a sequence where each operation’s output becomes the next operation’s input.

Anatomy of an AI Automator Chain:

An automator chain contains three main parts: Automator Base Fields that provide the input data (like an uploaded PDF file), output fields that store results from each processing step (like extracted text and AI responses), and the chain configuration that defines the sequence of operations (extract text from PDF, analyze with AI, parse results, and populate fields).

Automator base field (input)

The Automator Base Field is the module’s term for the input data source. This field provides the raw material that your workflow will process – whether that’s a PDF file, text content, an image, or other data requiring transformation or analysis.

In BetterRegulation’s implementation, the Automator Base Field is field_pdf_file, which holds the uploaded PDF document that flows through the extraction and analysis pipeline. The module also offers an Automator Input Mode setting for choosing between basic mode (one field) and advanced mode (multiple fields via tokens), giving you flexibility in how data enters your workflow.

Output fields (results)

Output fields (sometimes called AI Automator Output Fields in the documentation) store intermediate and final results from workflow execution. Unlike the Automator Base Field which holds the raw input, output fields capture the “after” state – what emerges from each processing step in your chain.

Typical output fields include extracted text from PDFs, raw AI responses (usually JSON), processing metadata like timestamps and token counts, and error messages when things go wrong. In an AI Automator Chain, these fields form a pipeline where one step’s output becomes the next step’s input.

BetterRegulation’s implementation uses field_extracted_text to store the clean text extracted from PDFs and field_ai_response to hold the raw JSON response from GPT-4o-mini. This separation of concerns proves valuable in several ways.

Separate output fields enable debugging by letting you inspect what happened at each step. They support caching strategies where extracted text can be reused for different prompts without re-processing the PDF. They provide auditing capabilities by tracking exactly what the AI returned before any parsing or transformation. And they enable reprocessing workflows where you can re-run later steps without re-extracting earlier data – particularly useful when refining prompts.
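To make the reprocessing point concrete, here is a minimal Python sketch. It is not the module's API (AI Automators is PHP and stores these values in real Drupal fields). It assumes a chain is a list of hypothetical `(output_field, source_field, transform)` tuples and shows how re-running from a later step reuses the stored extracted text instead of calling the extraction step again.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical step shape: (output_field, source_field, transform).
Step = Tuple[str, str, Callable[[str], str]]


def run_chain(steps: List[Step], fields: Dict[str, str], start: int = 0) -> Dict[str, str]:
    """Run steps[start:] in order, reading inputs from and writing outputs to `fields`.

    Starting at start > 0 reuses intermediate values saved by earlier steps.
    """
    for output_field, source_field, transform in steps[start:]:
        if source_field not in fields:
            raise KeyError(f"{output_field!r} needs {source_field!r}, which is empty")
        fields[output_field] = transform(fields[source_field])
    return fields


# Usage: count how often the (fake) PDF extraction runs.
calls = {"extract": 0}


def fake_extract(pdf_path: str) -> str:
    calls["extract"] += 1
    return f"text of {pdf_path}"


steps: List[Step] = [
    ("field_extracted_text", "field_pdf_file", fake_extract),
    ("field_ai_response", "field_extracted_text", lambda text: text.title()),
]
fields = run_chain(steps, {"field_pdf_file": "doc.pdf"})

# The prompt changed: swap step 2 and re-run only from there.
steps[1] = ("field_ai_response", "field_extracted_text", lambda text: text.upper())
run_chain(steps, fields, start=1)
```

Because every step writes to its own field, the re-run after a prompt change only pays for the AI step, not for another PDF extraction.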

Chain configuration

The chain configuration defines your workflow – the sequence of AI Automators executed in order. This is where your multi-step logic lives. According to the module documentation, chains are created as temporary, bundleable entities with one field per AI step, processing data through several stages within a unified workflow.

Each step in the chain has four key components. The type defines what operation to perform, whether that’s text extraction, AI analysis, parsing, or another operation – in the module’s terms, this might be a text completion automator, an image analysis automator, or a custom automator plugin. The source specifies which field(s) to use as input, pulling data from either the Automator Base Field or previous steps’ output fields. The configuration contains parameters specific to this step, like the Automator Prompt, model selection, or processing options. And the output determines which field to write results to, making them available for subsequent steps or final use.

The module also uses Automator Weight to control the execution order when multiple automators are applied to the same field – a lower weight runs first.
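The four step components (type, source, configuration, output) and weight-based ordering can be modeled roughly as follows. This is a Python sketch with hypothetical names (`ChainStep`, `step_type`, the handler table) and stub handlers. In the module, weight orders automators attached to the same field, so applying it across the whole chain here is a simplification.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ChainStep:
    name: str
    step_type: str  # e.g. "pdf_extraction", "text_completion", "json_parse"
    source: str     # input: the base field or an earlier step's output field
    output: str     # field that receives this step's result
    weight: int = 0  # lower weight runs first
    config: Dict[str, Any] = field(default_factory=dict)  # prompt, model, options


# Each handler takes (input value, step config) and returns the output value.
Handler = Callable[[Any, Dict[str, Any]], Any]


def run_steps(steps: List[ChainStep], handlers: Dict[str, Handler],
              fields: Dict[str, Any]) -> Dict[str, Any]:
    # sorted() is stable, so steps with equal weight keep their listed order.
    for step in sorted(steps, key=lambda s: s.weight):
        handler = handlers[step.step_type]
        fields[step.output] = handler(fields[step.source], step.config)
    return fields


steps = [
    ChainStep("parse", "json_parse", "field_ai_response", "field_parsed", weight=20),
    ChainStep("extract", "pdf_extraction", "field_pdf_file", "field_extracted_text", weight=0),
    ChainStep("analyze", "text_completion", "field_extracted_text", "field_ai_response",
              weight=10, config={"model": "gpt-4o-mini"}),
]
# Stub handlers standing in for real extraction, AI and parsing operations.
handlers: Dict[str, Handler] = {
    "pdf_extraction": lambda value, cfg: f"text({value})",
    "text_completion": lambda value, cfg: f"{cfg['model']}:{value}",
    "json_parse": lambda value, cfg: value.upper(),
}
result = run_steps(steps, handlers, {"field_pdf_file": "a.pdf"})
```

Even though the steps are listed out of order, the weights decide that extraction runs first and parsing last.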

How to build a multi-step AI workflow with chains?

Let’s build BetterRegulation’s document summarization workflow as an AI Automator Chain – a multi-step process where each automator’s output feeds into the next.

Chain example: document summarization

Goal: generate three summary types (long, short, obligations) from a PDF document.

Chain steps:

The chain consists of four sequential AI Automators. First, the PDF file is sent to Unstructured.io for text extraction, producing clean text in an output field. Second, that text goes to GPT-4o-mini with a prompt requesting a comprehensive long summary. Third, the long summary gets condensed into a short version through another GPT call. Finally, the original extracted text is analyzed again with a different prompt focused specifically on extracting legal obligations.

Why this sequence?

This workflow design reflects practical constraints and optimization opportunities. Parallel processing isn’t possible because the short summary depends on the long summary – you can’t condense what doesn’t exist yet. The extracted text gets reused across multiple summary operations rather than re-extracting the PDF three times, saving processing time and API costs.
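The ordering constraint can be checked mechanically. The Python sketch below uses hypothetical output field names for the summaries (only `field_pdf_file` and `field_extracted_text` appear in the configuration described here) and flags any step that reads a field before an earlier step has produced it.

```python
from typing import Iterable, List, Tuple

# (step name, input field, output field); summary field names are hypothetical.
Step = Tuple[str, str, str]

SUMMARY_CHAIN: List[Step] = [
    ("extract", "field_pdf_file", "field_extracted_text"),
    ("long_summary", "field_extracted_text", "field_long_summary"),
    ("short_summary", "field_long_summary", "field_short_summary"),
    ("obligations", "field_extracted_text", "field_obligations_summary"),
]


def check_order(steps: List[Step], base_fields: Iterable[str]) -> List[str]:
    """List every step whose input is not yet available when it runs."""
    available = set(base_fields)
    problems: List[str] = []
    for name, source, output in steps:
        if source not in available:
            problems.append(f"{name} reads {source} before it is produced")
        available.add(output)
    return problems


print(check_order(SUMMARY_CHAIN, ["field_pdf_file"]))  # prints []
```

Swapping the long and short summary steps is reported immediately, while the obligations step reading `field_extracted_text` a second time is valid, which is exactly the reuse described above.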

Step 1: PDF → text (Unstructured.io)

The first step uses a custom text extraction plugin that integrates with Unstructured.io. The automator retrieves the PDF from the Automator Base Field, sends it to the Unstructured.io API with high-resolution layout analysis enabled, receives back structured elements (titles, paragraphs, list items), filters out noise like headers and footers, concatenates the clean content with proper spacing, and saves the result to an output field. This produces clean, AI-ready text from complex PDF layouts.
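The filtering and concatenation part of this step might look like this in Python. The sketch assumes the partition response has already been fetched (for example with Unstructured's `hi_res` strategy) and decoded into its JSON element list, where each element carries a `type` and a `text`. Which element types count as noise is an assumption to tune against your own documents; the custom plugin described above is PHP and may handle this differently.

```python
import json
from typing import Dict, Iterable, List

# Element types treated as layout noise (an assumption; tune for your documents).
NOISE_TYPES = {"Header", "Footer", "PageNumber", "PageBreak"}


def clean_text(elements: Iterable[Dict]) -> str:
    """Drop noise elements and join the rest with blank lines between them."""
    parts: List[str] = []
    for element in elements:
        element_type = element.get("type")
        if element_type in NOISE_TYPES:
            continue
        text = (element.get("text") or "").strip()
        if not text:
            continue
        if element_type == "ListItem":
            text = "- " + text  # keep list structure visible to the model
        parts.append(text)
    return "\n\n".join(parts)


response_body = """[
  {"type": "Header", "text": "ACME Regulator"},
  {"type": "Title", "text": "Reporting guidance"},
  {"type": "NarrativeText", "text": "Firms must report annually."},
  {"type": "ListItem", "text": "Submit the annual return"},
  {"type": "Footer", "text": "Page 1 of 12"}
]"""
cleaned = clean_text(json.loads(response_body))
```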

Step 2: Text → long summary (GPT)

The second step uses AI Automators’ built-in text completion feature. It takes the extracted text and sends it to OpenAI’s GPT-4o-mini model with a prompt that requests a comprehensive summary focused on the document’s purpose, scope, affected parties, key themes, and context. The temperature setting is low (0.3) to ensure consistent, factual summaries rather than creative variations. The prompt uses token substitution to inject the extracted text dynamically, and the AI’s response gets saved to another field for use in subsequent steps.
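As an illustration of what such a text completion step sends, the sketch below substitutes `{{ field_extracted_text }}` into a prompt and builds a request body in the shape of OpenAI's Chat Completions API with `temperature` set to 0.3. The prompt wording is hypothetical, and in practice the AI module's provider layer assembles and sends the request for you.

```python
from typing import Dict

# Hypothetical prompt; {{ field_extracted_text }} is replaced at run time.
LONG_SUMMARY_PROMPT = (
    "Summarize the regulatory document below. Explain its purpose, scope, "
    "affected parties, key themes and context.\n\n{{ field_extracted_text }}"
)


def build_request(template: str, fields: Dict[str, str],
                  model: str = "gpt-4o-mini", temperature: float = 0.3) -> dict:
    prompt = template
    for name, value in fields.items():
        prompt = prompt.replace("{{ " + name + " }}", value)
    # Body shaped like an OpenAI Chat Completions request.
    return {
        "model": model,
        "temperature": temperature,  # low value for consistent, factual output
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_request(LONG_SUMMARY_PROMPT,
                        {"field_extracted_text": "Firms must report annually."})
```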

Step 3: Long summary → short summary (GPT)

The third step takes the long summary from step 2 and condenses it using a different prompt focused on distillation rather than creation. This approach uses fewer tokens since it’s processing a summary instead of the full document text. Keeping this as a separate step allows regenerating the short summary without regenerating the long one, and makes it easier to optimize each summary type independently based on user feedback.

How to manage prompts in AI Automators?

One of AI Automators’ most powerful features is the ability to manage prompts through the admin UI rather than hardcoding them. This enables rapid iteration and gives domain experts direct control over workflow refinement.

Storing and editing prompts

Admin UI location: /admin/config/ai/automators for managing automators and chains. Each automator can have an Automator Prompt configured through the admin interface.

Benefits of UI-managed prompts:

Managing Automator Prompts through the UI rather than hardcoding them brings several advantages. You can edit prompts without code changes, meaning no deployments, no Git commits, no waiting for CI/CD pipelines. Non-developers – the editors and domain experts who actually understand content nuances – can refine prompts themselves without involving developers. A/B testing becomes straightforward: clone a chain configuration, modify the Automator Prompt, test with real documents, and compare results. Version control remains intact because Automator Prompts live in Drupal configuration, which can be exported, tracked, and rolled back just like code. And the iteration cycle shrinks from days to minutes – modify the prompt, click save, test immediately.

BetterRegulation workflow:

- Developer creates initial prompt.

- Legal editor reviews and suggests improvements.

- Edit prompt in admin UI.

- Test with 10-20 documents.

- Measure accuracy.

- Refine and repeat.

Result: prompts improved from 85% to 95% accuracy through iteration.
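One way to measure accuracy in each round is field-level agreement between the AI output and the editor-reviewed values. A minimal Python sketch; the field names and values are made up, and BetterRegulation's exact scoring method is not described here.

```python
from typing import Dict, List


def field_accuracy(ai_values: List[Dict[str, str]],
                   reviewed: List[Dict[str, str]]) -> float:
    """Share of reviewed fields where the AI suggestion matched the editor's value."""
    if len(ai_values) != len(reviewed):
        raise ValueError("need one AI result per reviewed document")
    total = correct = 0
    for ai, truth in zip(ai_values, reviewed):
        for name, expected in truth.items():
            total += 1
            correct += ai.get(name) == expected  # True counts as 1
    return correct / total if total else 0.0


ai_run = [{"field_jurisdiction": "UK", "field_topic": "Tax"},
          {"field_jurisdiction": "EU", "field_topic": "Data protection"}]
editor_reviewed = [{"field_jurisdiction": "UK", "field_topic": "Tax"},
                   {"field_jurisdiction": "UK", "field_topic": "Data protection"}]
score = field_accuracy(ai_run, editor_reviewed)  # 3 of 4 fields match
```

Running the same reviewed set after each prompt edit gives a comparable number per iteration.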

Token injection

AI Automators supports dynamic token replacement, letting you build prompts that adapt to the content being processed.

The available tokens include {{ field_name }} to reference any field from the Automator Base Field or previous processing steps’ output fields, standard Drupal tokens such as [node:title] for the node title, [node:created] for the creation date, and [current-user:name] for the current user, and custom tokens you define through hook_token_info() and hook_tokens(). This flexibility means your prompts can reference entity data, user context, or any other Drupal token.

Prompts can use multiple tokens to create context-aware instructions. For example, a prompt might reference the content type, title, creation date, and user role to generate summaries tailored to specific audiences or document types – all through simple token placeholders that get replaced automatically during execution.
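A stricter renderer for `{{ name }}` placeholders can catch typos in prompt templates before they reach the model. This hypothetical Python helper is not how the module resolves tokens; it only shows the idea of failing loudly on an unknown placeholder, or leaving it untouched when `strict` is off. The `audience` placeholder is made up for the example.

```python
import re
from typing import Mapping

PLACEHOLDER = re.compile(r"\{\{\s*([\w:.-]+)\s*\}\}")


def render(template: str, values: Mapping[str, str], strict: bool = True) -> str:
    """Replace {{ name }} placeholders; raise on unknown names when strict."""
    def substitute(match: "re.Match[str]") -> str:
        key = match.group(1)
        if key in values:
            return str(values[key])
        if strict:
            raise KeyError(f"no value for placeholder {key!r}")
        return match.group(0)  # leave the placeholder untouched

    return PLACEHOLDER.sub(substitute, template)


prompt = render("Summarize this for {{ audience }}:\n\n{{field_extracted_text}}",
                {"audience": "compliance officers",
                 "field_extracted_text": "Firms must report annually."})
```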

How to integrate AI Automators with Drupal forms?

AI Automators can be triggered from Drupal’s content editing forms, giving editors direct control over when processing happens. The implementation approach depends on whether you need immediate results or can process in the background.

Triggering AI Automators from UI

Option 1: Button click (real-time processing)

For document categorization, BetterRegulation adds a “Generate with AI” button to the content edit form using Drupal’s form alter system. When clicked, the button triggers an AJAX-enabled handler that shows a progress indicator while processing. The handler loads the automator configuration, passes in the uploaded PDF file, executes the workflow, retrieves the AI’s JSON response, parses it, and populates form fields with the extracted values. The form rebuilds automatically to show the populated fields, allowing editors to review and adjust before saving.
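The parse-and-populate part of the handler is where most defensive logic belongs. Sketched in Python (the real handler is a Drupal form callback in PHP), assuming the model returns a flat JSON object keyed by field name and that each field has a fixed list of allowed taxonomy options; the field names are hypothetical.

```python
import json
from typing import Dict, List, Tuple


def parse_categorization(raw: str, allowed: Dict[str, List[str]]
                         ) -> Tuple[Dict[str, str], List[str]]:
    """Return (values safe to put into the form, warnings to show the editor)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return {}, [f"AI response was not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return {}, ["AI response was not a JSON object"]
    values: Dict[str, str] = {}
    warnings: List[str] = []
    for field_name, options in allowed.items():
        value = data.get(field_name)
        if value is None:
            warnings.append(f"{field_name}: no value returned")
        elif value not in options:
            warnings.append(f"{field_name}: {value!r} is not an allowed option")
        else:
            values[field_name] = value
    return values, warnings


allowed_terms = {"field_jurisdiction": ["UK", "EU"],
                 "field_topic": ["Tax", "Data protection"]}
values, warnings = parse_categorization(
    '{"field_jurisdiction": "UK", "field_topic": "Banking"}', allowed_terms)
```

Anything that fails validation becomes a warning for the editor rather than a silently populated field, which keeps the human review step meaningful.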

Option 2: Automatic triggering (background processing)

For summary generation, the system uses Drupal’s entity hooks to automatically queue processing when a document is created or updated. The queue system adds a job with a 15-minute delay timestamp, ensuring the document has time to settle before expensive AI processing begins. This approach requires no user interaction – summaries generate automatically in the background.

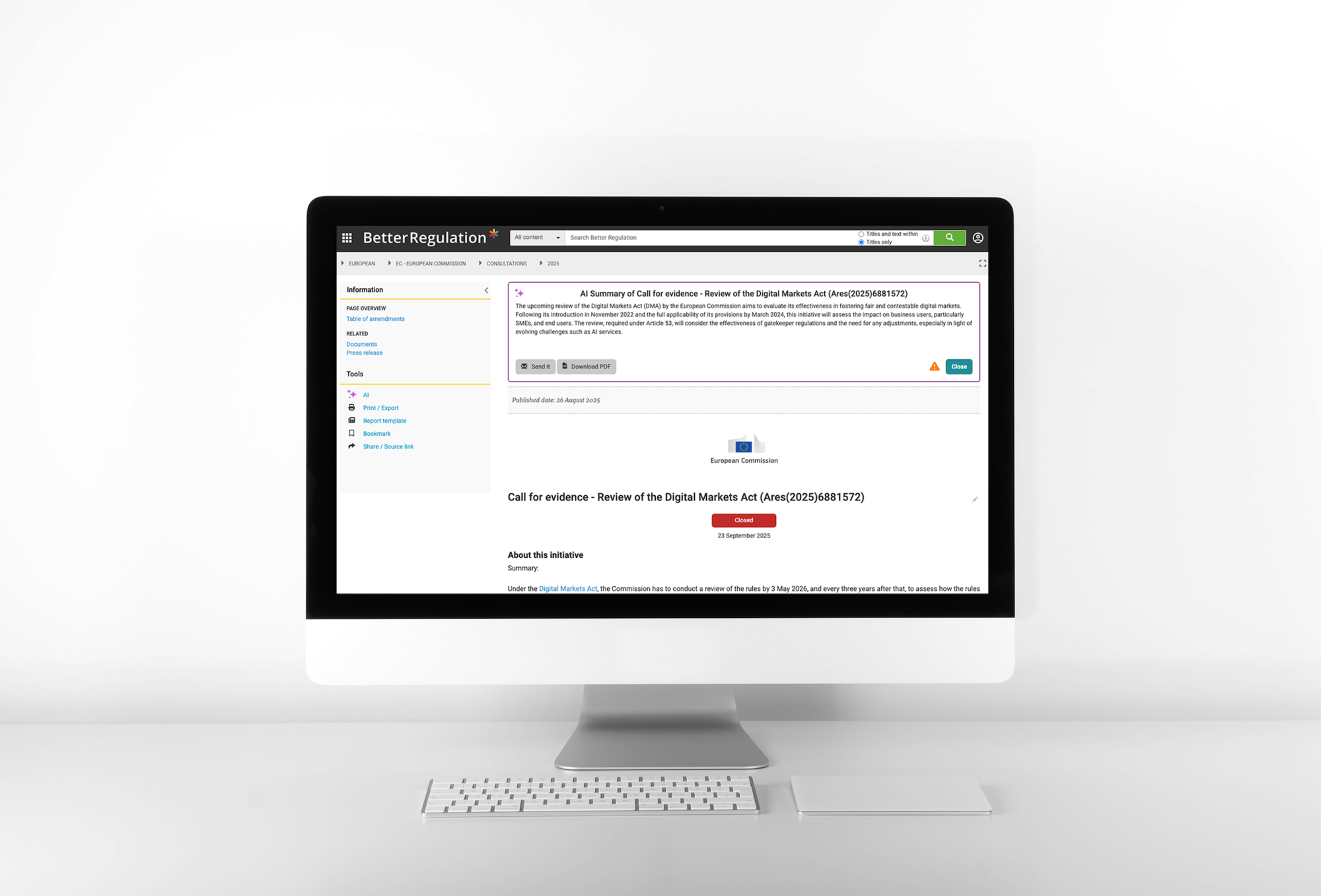

An AI-driven workflow for automated document summaries implemented for Better Regulation

Real-time vs background processing

Real-time (synchronous):

Use real-time processing when users need immediate results. Document categorization is a good example: editors upload a PDF, click “Generate with AI,” and wait while fields populate. Processing typically takes 10 seconds to 2 minutes. The UX includes a progress indicator, and an AJAX form rebuild shows the populated fields once processing completes.

Background (asynchronous):

Background processing works when results aren’t needed immediately. Summary generation fits this pattern – editors don’t need summaries to complete their work, so processing can happen later. Duration can be longer since it’s not blocking user work, and summaries simply appear when ready without any waiting.

BetterRegulation’s approach:

Categorization runs in real-time because editors need those fields populated before saving the document – the taxonomy assignments are part of the core content creation workflow. Summaries run in the background because there’s no rush, and they shouldn’t block the editorial workflow. Editors can create, categorize, and save documents immediately while summaries generate behind the scenes.

User feedback during processing

For real-time processing, the system displays a throbber (spinning indicator) with an estimated time message, keeping users informed while processing happens. When complete, success messages show how many fields were populated and remind editors to review the results. Error messages provide specific failure reasons, helping editors understand what went wrong and whether to retry or handle the document manually.

Queue integration with RabbitMQ

For time-intensive AI operations, queuing provides crucial benefits over synchronous processing. BetterRegulation uses RabbitMQ to handle background processing, with a clever delay mechanism that prevents redundant API calls.

Why queue Automator execution?

Synchronous processing creates several problems in production environments. UI blocking means users wait 30-60 seconds while processing completes, unable to do other work. Timeout risk emerges when long processing operations exceed PHP or web server timeout limits, causing failures. Redundant processing happens when an editor makes five quick edits in succession, triggering five expensive AI calls for essentially the same document. And peak load issues arise because all processing happens during business hours when servers are already busy.

Queues solve these problems. Background processing is non-blocking: users continue working while processing happens elsewhere. Workers run outside the web request cycle, so they are not bound by web server or PHP request timeouts. The delay mechanism (more on this shortly) prevents redundant processing by consolidating multiple rapid edits. And load can be distributed, for example by scheduling heavy processing for off-peak hours when server resources are more available.

The 15-minute delay explained

The problem BetterRegulation faced:

Editors would:

1. Create a document (triggers summary generation).

2. Realize the title is wrong (edit and save).

3. Add more metadata (edit and save).

4. Fix a typo (edit and save).

5. Do a final review (edit and save).

Result: 5 save operations in 10 minutes = 5 expensive AI summary generations for the same document.

The solution: delay queue execution

The implementation checks if a document is already queued. If it is, the system simply resets the delay timer to 15 minutes from now. If it’s not queued, it creates a new queue item with a 15-minute delay timestamp. Queue workers check the delay timestamp before processing – if the delay hasn’t elapsed, they re-queue the item for later. If it has elapsed, they proceed with summary generation.

Result: an editor making 5 edits in 10 minutes triggers just one summary generation run (15 minutes after the last edit), saving 4 redundant runs and their associated API costs.

The delay duration is configurable through Drupal’s configuration system, allowing administrators to adjust the timing based on their editors’ workflows and cost considerations.
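The debounce logic can be simulated in a few lines. This Python sketch assumes a separate store of due timestamps (`due_at`, a hypothetical name) that answers “is this node already queued?”, because messages already sitting in RabbitMQ cannot be edited in place. An in-memory deque stands in for the queue, and a real deployment would keep `due_at` in shared storage.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple


class DebouncedQueue:
    """In-memory stand-in for a delayed queue plus a due-time store."""

    def __init__(self, delay_seconds: int = 15 * 60) -> None:
        self.delay = delay_seconds            # configurable delay
        self.due_at: Dict[int, float] = {}    # node id -> earliest processing time
        self.messages: Deque[int] = deque()   # stand-in for the RabbitMQ queue

    def enqueue(self, nid: int, now: float) -> None:
        if nid not in self.due_at:
            self.messages.append(nid)         # first save: create a queue item
        self.due_at[nid] = now + self.delay   # every save: restart the timer

    def work(self, now: float, generate: Callable[[int], None]) -> None:
        # Look at each waiting message once per poll.
        for _ in range(len(self.messages)):
            nid = self.messages.popleft()
            if self.due_at[nid] > now:
                self.messages.append(nid)     # too early: re-queue for later
            else:
                del self.due_at[nid]
                generate(nid)


# Five saves of node 42 within ten minutes; the worker polls every minute.
queue = DebouncedQueue()
save_times = {0, 120, 300, 420, 600}
runs: List[Tuple[int, int]] = []
for t in range(0, 40 * 60, 60):
    if t in save_times:
        queue.enqueue(42, t)
    queue.work(t, lambda nid: runs.append((nid, t)))
```

The five saves produce a single generation run at t = 1500 seconds (minute 25), which is 15 minutes after the last save.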

RabbitMQ + Drupal integration

Why RabbitMQ?

RabbitMQ provides reliable message delivery, persistence that survives server restarts, an acknowledgment system that prevents lost jobs, dead letter queues for handling failed jobs, and easy scalability by adding more workers.

Note: BetterRegulation already had RabbitMQ configured on their project for other queuing needs. The AI implementation leveraged this existing infrastructure.

Setup involves installing the Drupal RabbitMQ module via Composer, configuring connection credentials in settings.php (host, port, username, password, virtual host), and defining queue services in a services.yml file. The configuration connects Drupal’s queue system to RabbitMQ, replacing the default database-based queuing.

Worker configuration

Supervisor (Process Manager):

BetterRegulation uses Supervisor to manage queue workers. The configuration runs 2 worker processes continuously, each processing the queue for 1 hour before restarting to prevent memory leaks. If a worker crashes, Supervisor automatically restarts it, maintaining consistent processing capacity. All output gets logged for debugging.

For local development, Docker Compose mounts the Supervisor configuration and starts supervisord. For production, Kubernetes runs worker pods with resource limits (512Mi memory, 500m CPU) and replica configuration (3 workers for redundancy and throughput). This containerized approach ensures consistent worker behavior across environments.

How to monitor and debug AI Automators?

Production AI workflows need comprehensive monitoring to catch failures, track performance, and optimize costs. The module integrates with Drupal’s logging system and can be extended with custom monitoring solutions.

Watchdog integration

AI Automators automatically logs to Drupal’s Watchdog system. You can enhance this by implementing custom hooks that log execution times for each step, track which automator ran, and capture detailed error information including stack traces when processing fails. Some implementations also send admin notifications when failures occur, ensuring problems don’t go unnoticed.
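A per-step timing wrapper could look like the following. Python's `logging` module stands in for Drupal's logger here, and `timed_step` is a hypothetical helper rather than a hook the module provides.

```python
import logging
import time
from contextlib import contextmanager
from typing import Iterator

logger = logging.getLogger("ai_automators")
logger.setLevel(logging.INFO)


@contextmanager
def timed_step(automator: str, nid: int) -> Iterator[None]:
    """Log how long one automator step took, and the full traceback if it failed."""
    start = time.perf_counter()
    try:
        yield
    except Exception:
        logger.exception("Automator %s failed for node %s after %.2fs",
                         automator, nid, time.perf_counter() - start)
        raise  # let the caller's retry or fallback logic decide what happens next
    logger.info("Automator %s finished for node %s in %.2fs",
                automator, nid, time.perf_counter() - start)


logging.basicConfig(level=logging.INFO)
with timed_step("long_summary", 42):
    time.sleep(0.01)  # stand-in for the real automator call
```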

Admin views for processing status

Create an admin view at /admin/reports/ai-processing-status that displays recently processed documents with their status (success/failed/queued), processing duration, token usage, and any error messages. Include fields for node title and link, processing timestamp, status indicator, duration, and error details. Add filters for status, date range, and content type so admins can quickly find problematic documents or analyze patterns. Sort by timestamp descending to show the most recent processing first.

Failed job handling

Implement automatic retry logic for transient failures like network errors or API timeouts. The system attempts processing up to 3 times before giving up, incrementing an attempt counter with each retry. Non-retriable errors (like malformed data) fail immediately without retries.

Configure RabbitMQ with dead letter queues so failed jobs automatically move to a separate queue for admin review rather than disappearing. This ensures no document gets lost – admins can inspect failed jobs, fix underlying issues, and manually retry if needed.
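The retry policy described above, sketched in Python with hypothetical exception classes that separate transient from permanent failures. For brevity it retries in a loop; a real queue worker would more likely re-queue the message with an incremented attempt count and let RabbitMQ route exhausted items to the dead letter queue.

```python
from typing import Callable, Dict, List


class TransientError(Exception):
    """Network errors, API timeouts, rate limiting: worth another attempt."""


class PermanentError(Exception):
    """Malformed or unsupported input: retrying will not help."""


MAX_ATTEMPTS = 3


def process_with_retry(item: Dict, handler: Callable[[Dict], None],
                       dead_letter: List[Dict]) -> bool:
    """Try up to MAX_ATTEMPTS times; park the item in dead_letter if it never succeeds."""
    while item.get("attempts", 0) < MAX_ATTEMPTS:
        item["attempts"] = item.get("attempts", 0) + 1
        try:
            handler(item)
            return True
        except PermanentError as exc:
            item["error"] = str(exc)
            break                      # fail immediately, no retries
        except TransientError as exc:
            item["error"] = str(exc)   # loop and try again
    dead_letter.append(item)           # kept for admin review and manual retry
    return False
```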

Real-world example: BetterRegulation

Let’s look at BetterRegulation’s actual production chains to see how the concepts covered earlier come together. These examples show real configuration choices and the reasoning behind them.

Chain example: document categorization

The document categorization chain takes a PDF file as the Automator Base Field and produces extracted text and AI response JSON as intermediate output fields. It runs three automators in sequence: first, extract text from the PDF using Unstructured.io with high-resolution analysis; second, send the extracted text to GPT-4o-mini with a comprehensive Automator Prompt containing all taxonomy options and request JSON output (temperature 0.1 for consistency, max 2000 tokens); third, parse the JSON response and populate the node’s taxonomy and metadata fields.
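For the second step, a request along these lines embeds every taxonomy option in the prompt and asks for JSON. The prompt text and field names are hypothetical. `response_format` is OpenAI's JSON mode, and whether your provider configuration passes it through is an assumption to verify.

```python
from typing import Dict, List


def build_categorization_request(text: str, taxonomies: Dict[str, List[str]]) -> dict:
    # One line per field, listing every allowed term so the model picks from them.
    options = "\n".join(f"- {name}: {', '.join(terms)}" for name, terms in taxonomies.items())
    prompt = (
        "Classify the regulatory document below. For each field, choose exactly one "
        "of the listed options. Answer with a single JSON object whose keys are the "
        f"field names.\n\nOptions:\n{options}\n\nDocument:\n{text}"
    )
    return {
        "model": "gpt-4o-mini",
        "temperature": 0.1,   # near-deterministic for consistent categorization
        "max_tokens": 2000,
        "response_format": {"type": "json_object"},  # OpenAI JSON mode
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_categorization_request(
    "Firms must report annually.",
    {"field_jurisdiction": ["UK", "EU"], "field_topic": ["Tax", "Data protection"]},
)
```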

Chain example: summary generation

The summary generation chain also starts with a PDF file as its base field and creates four output fields: extracted text, long summary, short summary, and obligations summary. It runs four automators in sequence: extract text (same as categorization), generate a comprehensive long summary from the full text, condense that long summary into a short version, and extract legal obligations from the full text using a specialized prompt. This chain is triggered by the queue system and runs in the background with a 15-minute delay.

What are the best practices for AI Automators?

These lessons come from BetterRegulation’s implementation and can help you avoid common pitfalls. Apply them when designing your AI workflows to build more maintainable and reliable systems.

1. Design for debugging

Include intermediate result fields like extracted text, raw AI responses, and processing logs. When something goes wrong, you can inspect each step’s output to pinpoint where the failure occurred – whether it’s PDF extraction quality, AI response formatting, or field population logic.

2. Modular prompts

Avoid creating one massive prompt that tries to do everything. Instead, separate prompts for separate concerns – one prompt for categorization focusing on taxonomy matching, another for summarization focusing on clear explanations, and a third for obligations extraction focusing on requirements.

This modular approach brings several benefits. Each prompt becomes easier to optimize because you’re tuning for one specific task rather than juggling competing concerns. You can test prompts independently, isolating what works and what doesn’t. Prompts become reusable across workflows – your summarization prompt can work for multiple content types. And instructions stay clearer when they’re focused on a single goal rather than trying to communicate everything at once.

3. Version your Automator Chains

Treat your AI Automator Chains like code – use Drupal’s configuration management to export them. Running drush config:export saves your chains and automators to the config directory (e.g., config/sync/), putting them under version control alongside your code.

This brings standard version control benefits to your AI workflows. You can track prompt changes over time (since Automator Prompts are part of the configuration), understanding what worked and what didn’t. Rollback becomes possible when a prompt change reduces accuracy. And deployment across environments becomes reliable – development, staging, and production can sync automator and chain configurations just like they sync other site configuration.

4. Test with real data

Create a test automator by cloning your production configuration. Modify prompts in the test version, process 20-30 real documents, and compare accuracy against production. If the test version performs better, promote it to production. This approach lets you iterate safely without risking production accuracy.

5. Monitor token usage

Implement token usage logging that tracks input and output tokens for each processing run, estimates costs based on current API pricing, and stores the data with timestamps. Generate monthly reports showing documents processed, total tokens consumed, and estimated costs. This visibility helps spot cost spikes, optimize prompts for efficiency, and forecast budgets.
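A minimal Python sketch of that logging and reporting. The per-million-token prices are parameters on purpose: plug in your provider's current rates, because the numbers in the usage example are placeholders, not real pricing.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable


@dataclass
class UsageRecord:
    timestamp: datetime
    nid: int
    input_tokens: int
    output_tokens: int


def estimate_cost(record: UsageRecord, input_per_million: float,
                  output_per_million: float) -> float:
    return (record.input_tokens * input_per_million
            + record.output_tokens * output_per_million) / 1_000_000


def monthly_report(records: Iterable[UsageRecord], input_per_million: float,
                   output_per_million: float) -> Dict[str, dict]:
    months: Dict[str, dict] = defaultdict(lambda: {"nids": set(), "tokens": 0, "cost": 0.0})
    for record in records:
        month = months[record.timestamp.strftime("%Y-%m")]
        month["nids"].add(record.nid)  # a reprocessed document counts once per month
        month["tokens"] += record.input_tokens + record.output_tokens
        month["cost"] += estimate_cost(record, input_per_million, output_per_million)
    return {key: {"documents": len(m["nids"]), "tokens": m["tokens"],
                  "estimated_cost": round(m["cost"], 4)}
            for key, m in sorted(months.items())}


# Placeholder prices (currency units per million tokens), not real pricing.
report = monthly_report(
    [UsageRecord(datetime(2025, 1, 5), 1, 10_000, 1_000),
     UsageRecord(datetime(2025, 1, 20), 1, 2_000, 500),
     UsageRecord(datetime(2025, 2, 1), 2, 4_000, 400)],
    input_per_million=1.0, output_per_million=2.0,
)
```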

6. Graceful degradation

Plan for failures by wrapping automator execution in try-catch blocks. When processing fails, log the error with full details, set default values to keep the workflow moving, flag the content for manual review, and allow publishing to continue. Don’t let AI failures block critical workflows – editorial processes should degrade gracefully rather than halt completely.
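In Python terms, the pattern looks like this (hypothetical names; in Drupal it would be a try/catch around the automator call in PHP). The broad `except` is deliberate: any AI failure should flag the node rather than stop the editorial workflow.

```python
import logging
from typing import Callable, Dict

logger = logging.getLogger("ai_automators")


def categorize_or_flag(node: Dict, run_automator: Callable[[Dict], Dict],
                       defaults: Dict) -> Dict:
    """Apply AI results if possible; otherwise fall back and flag for review."""
    try:
        node.update(run_automator(node))
        node["needs_review"] = False
    except Exception:  # any AI failure must not block the editorial workflow
        logger.exception("AI categorization failed for node %s", node.get("nid"))
        for key, value in defaults.items():
            node.setdefault(key, value)  # keep values an editor already set
        node["needs_review"] = True
    return node  # saving and publishing continue either way


def failing_automator(node: Dict) -> Dict:
    raise RuntimeError("provider timeout")


ok = categorize_or_flag({"nid": 1}, lambda n: {"field_topic": "Tax"},
                        {"field_topic": "Unclassified"})
flagged = categorize_or_flag({"nid": 2}, failing_automator,
                             {"field_topic": "Unclassified"})
```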

7. Security

Validate all inputs before processing. Check file MIME types (PDF only), enforce size limits (e.g., 50MB maximum), and scan uploads for malware if handling user-submitted files. Implement rate limiting to prevent abuse – for example, limit users to 20 AI processing requests per hour. These safeguards protect against malicious uploads, resource exhaustion, and cost abuse.
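A Python sketch of the file checks and the rate limit, assuming you can read the file's first bytes and its size before processing. The `%PDF-` header check complements the declared MIME type, which clients can spoof. Malware scanning needs a dedicated tool and is left out. The rate limiter is an in-memory sliding window, so a multi-server setup would need shared storage.

```python
from collections import deque
from typing import Deque, Dict

MAX_BYTES = 50 * 1024 * 1024  # 50MB upload limit
PDF_SIGNATURE = b"%PDF-"       # well-formed PDF files start with this header


def validate_upload(head: bytes, size: int, declared_mime: str) -> None:
    """Raise ValueError unless the upload looks like a PDF within the size limit."""
    if declared_mime != "application/pdf":
        raise ValueError("only PDF uploads are accepted")
    if not head.startswith(PDF_SIGNATURE):
        raise ValueError("file content does not look like a PDF")
    if size > MAX_BYTES:
        raise ValueError("file exceeds the 50MB limit")


class RateLimiter:
    """Sliding-window limit, e.g. 20 AI processing requests per user per hour."""

    def __init__(self, limit: int = 20, window_seconds: int = 3600) -> None:
        self.limit = limit
        self.window = window_seconds
        self.calls: Dict[int, Deque[float]] = {}

    def allow(self, uid: int, now: float) -> bool:
        recent = self.calls.setdefault(uid, deque())
        while recent and now - recent[0] >= self.window:
            recent.popleft()  # forget requests older than the window
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True
```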

AI Automators: benefits and real-world results

AI Automators transforms AI integration from complex custom code into configurable workflows.

Key benefits:

- Configuration over code - build workflows through admin UI.

- Rapid iteration - test different prompts and models quickly.

- Non-developer access - domain experts can refine prompts.

- Multi-step orchestration - chain operations seamlessly.

- Provider abstraction - switch between OpenAI, Claude, etc.

- Production-ready - built-in error handling and logging.

BetterRegulation’s results with AI Automators:

The production system now processes over 200 documents monthly with greater than 95% accuracy in categorization. Less than 5% of fields require editor correction – a level of accuracy that makes the human-in-the-loop approach practical rather than burdensome. Time savings reached 50% compared to fully manual processing, and one full-time equivalent was freed to focus on higher-value work like policy analysis and strategic content planning.

If you’re building AI features in Drupal, AI Automators (part of the AI module) significantly reduces development time and complexity. The configuration-over-code approach means less custom code to maintain, faster iteration cycles, and domain expert involvement in workflow refinement. Whether you need a single AI Automator for one operation or an AI Automator Chain for complex multi-step workflows, the module provides the framework you need.

Start simple: Build one automator for one content type. For multi-step workflows, create an AI Automator Chain linking operations together. Test thoroughly with real data. Measure results. Then expand to additional content types and workflows, applying lessons learned from the first implementation.

Want to implement AI Automators in your Drupal platform?

This case study is based on our real production implementation for BetterRegulation, where we built multi-step AI workflows that process over 200 documents monthly with 95%+ accuracy. The system has been running in production since 2024, delivering consistent results with automated document categorization, summary generation, and background queue processing – saving 50% of manual processing time.

Interested in building AI workflows for your Drupal site? Our team specializes in creating production-grade AI Automators implementations that balance accuracy, performance, and maintainability. We handle everything from initial architecture design and workflow configuration through to production deployment and optimization. Visit our generative AI development services to discover how we can help you leverage AI Automators for your content workflows.